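This article covers local debugging in K8s Operator development. A complex Operator is rarely written correctly in one go, so you end up debugging often, and rebuilding the image, pushing it, and restarting the service every time is tedious. The focus here is therefore on developing and debugging locally against a remote K8s cluster.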

The previous article, K8s Operator Development Part 1: A First Look at Kubebuilder, walked through the whole process of developing a K8s Operator.

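For a complex Operator, however, you cannot get the code right in one pass, and debugging is unavoidable. Rebuilding the image, pushing it, and restarting the service just to debug a line or two or add a print statement is not practical.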

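So this article shows how to develop and debug locally while connected to a remote K8s cluster: the Operator process runs on your own machine and talks to the cluster through your kubeconfig.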

1. Environment Setup

Create a Cluster

To debug an Operator you first need a K8s cluster. Here one is deployed with KubeClipper; see the article: Using KubeClipper to Quickly Create a K8s Cluster with a Single Command.

Connect to the Cluster Locally

Once the cluster is ready, copy its kubeconfig to your local machine as ~/.kube/config and install kubectl locally. Then verify that kubectl commands work from your machine, like this:

```bash
❯ kubectl get po -A
NAMESPACE          NAME                                       READY   STATUS    RESTARTS       AGE
calico-apiserver   calico-apiserver-6f86f48f4b-cw7nw          1/1     Running   2 (6d5h ago)   7d22h
calico-apiserver   calico-apiserver-6f86f48f4b-mww2r          1/1     Running   2 (6d5h ago)   7d22h
calico-system      calico-kube-controllers-5f8646f489-8lpms   1/1     Running   0              7d22h
calico-system      calico-node-295tr                          1/1     Running   0              7d22h
calico-system      calico-typha-759985f586-q9dwp              1/1     Running   0              7d22h
calico-system      csi-node-driver-bpmd5                      2/2     Running   0              7d22h
calico-system      tigera-operator-5f4668786-dj2th            1/1     Running   1 (6d5h ago)   7d22h
default            app-demo-86b66c84cd-4947h                  1/1     Running   0              5d23h
kube-system        coredns-5d78c9869d-krwzb                   1/1     Running   0              7d22h
kube-system        coredns-5d78c9869d-ppx2c                   1/1     Running   0              7d22h
kube-system        etcd-bench                                 1/1     Running   0              7d22h
kube-system        kc-kubectl-78c9594489-pd6gw                1/1     Running   0              7d22h
kube-system        kube-apiserver-bench                       1/1     Running   0              7d22h
kube-system        kube-controller-manager-bench              1/1     Running   2 (6d5h ago)   7d22h
kube-system        kube-proxy-4x99q                           1/1     Running   0              7d22h
kube-system        kube-scheduler-bench                       1/1     Running   2 (6d5h ago)   7d22h
```

For an Operator without a Webhook, this is all you need for local debugging. If the Operator has a Webhook, some extra configuration is required.

2. Debugging the Controller

Generate Manifests

Run `make manifests` to generate the YAML for the CRDs we defined, along with other deployment-related YAML (RBAC and webhook configuration):

```bash
❯ make manifests
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
```

The generated CRD:

```yaml
---
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  annotations:
    controller-gen.kubebuilder.io/version: v0.16.4
  name: applications.core.crd.lixueduan.com
spec:
  group: core.crd.lixueduan.com
  names:
    kind: Application
    listKind: ApplicationList
    plural: applications
    singular: application
  scope: Namespaced
  versions:
  - name: v1
    schema:
      openAPIV3Schema:
        description: Application is the Schema for the applications API.
        properties:
          apiVersion:
            description: |-
              APIVersion defines the versioned schema of this representation of an object.
              Servers should convert recognized schemas to the latest internal value, and
              may reject unrecognized values.
              More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources
            type: string
          kind:
            description: |-
              Kind is a string value representing the REST resource this object represents.
              Servers may infer this from the endpoint the client submits requests to.
              Cannot be updated.
              In CamelCase.
              More info: https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds
            type: string
          metadata:
            type: object
          spec:
            description: ApplicationSpec defines the desired state of Application.
            properties:
              enabled:
                type: boolean
              image:
                type: string
            type: object
          status:
            description: ApplicationStatus defines the observed state of Application.
            properties:
              ready:
                type: boolean
            type: object
        type: object
    served: true
    storage: true
    subresources:
      status: {}
```

The Spec and Status parts we defined are:

```yaml
spec:
  description: ApplicationSpec defines the desired state of Application.
  properties:
    enabled:
      type: boolean
    image:
      type: string
  type: object
status:
  description: ApplicationStatus defines the observed state of Application.
  properties:
    ready:
      type: boolean
```

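Here you can check whether the generated CRD matches what you expect and whether any errors were reported during generation.

If everything looks fine, move on to the next step.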

Deploy the CRD to the Cluster

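Run `make install` to deploy the CRD to the cluster. This is why the kubeconfig and kubectl need to be set up locally: the target pipes the kustomize output into `kubectl apply`.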

```bash
❯ make install
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
Downloading sigs.k8s.io/kustomize/kustomize/v5@v5.5.0
go: downloading sigs.k8s.io/kustomize/kustomize/v5 v5.5.0
go: downloading sigs.k8s.io/kustomize/api v0.18.0
go: downloading sigs.k8s.io/kustomize/cmd/config v0.15.0
go: downloading sigs.k8s.io/kustomize/kyaml v0.18.1
go: downloading k8s.io/kube-openapi v0.0.0-20231010175941-2dd684a91f00
/Users/lixueduan/17x/projects/i-operator/bin/kustomize build config/crd | kubectl apply -f -
customresourcedefinition.apiextensions.k8s.io/applications.core.crd.lixueduan.com created
```

Start the Controller Locally

Run `make run` to start the Controller locally. It connects to the cluster using the local kubeconfig, which is the other reason that file has to be in place.

```bash
❯ make run
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen object:headerFile="hack/boilerplate.go.txt" paths="./..."
go fmt ./...
go vet ./...
go run ./cmd/main.go
2024-12-19T10:03:35+08:00 INFO setup starting manager
2024-12-19T10:03:35+08:00 INFO starting server {"name": "health probe", "addr": "[::]:8081"}
2024-12-19T10:03:35+08:00 INFO Starting EventSource {"controller": "application", "controllerGroup": "core.crd.lixueduan.com", "controllerKind": "Application", "source": "kind source: *v1.Application"}
```

Running it locally makes debugging the Controller much easier. You can of course also start it in debug mode and set breakpoints.

3. Debugging the Webhook

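Once you add a Webhook, debugging locally becomes a bit more involved and needs a few more configuration changes.

It is still doable, though. Here is an approach that needs relatively few changes: custom Endpoints.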

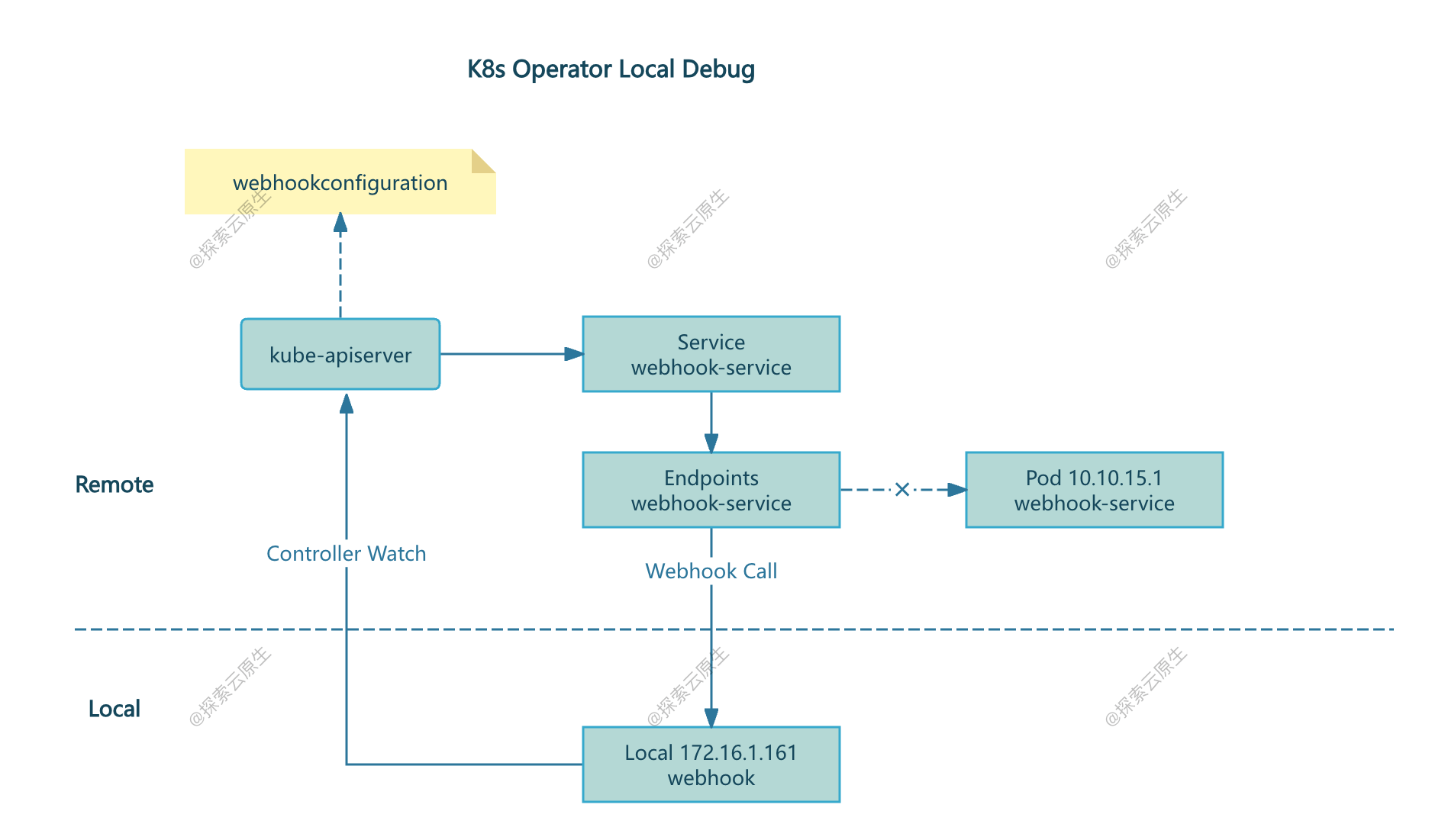

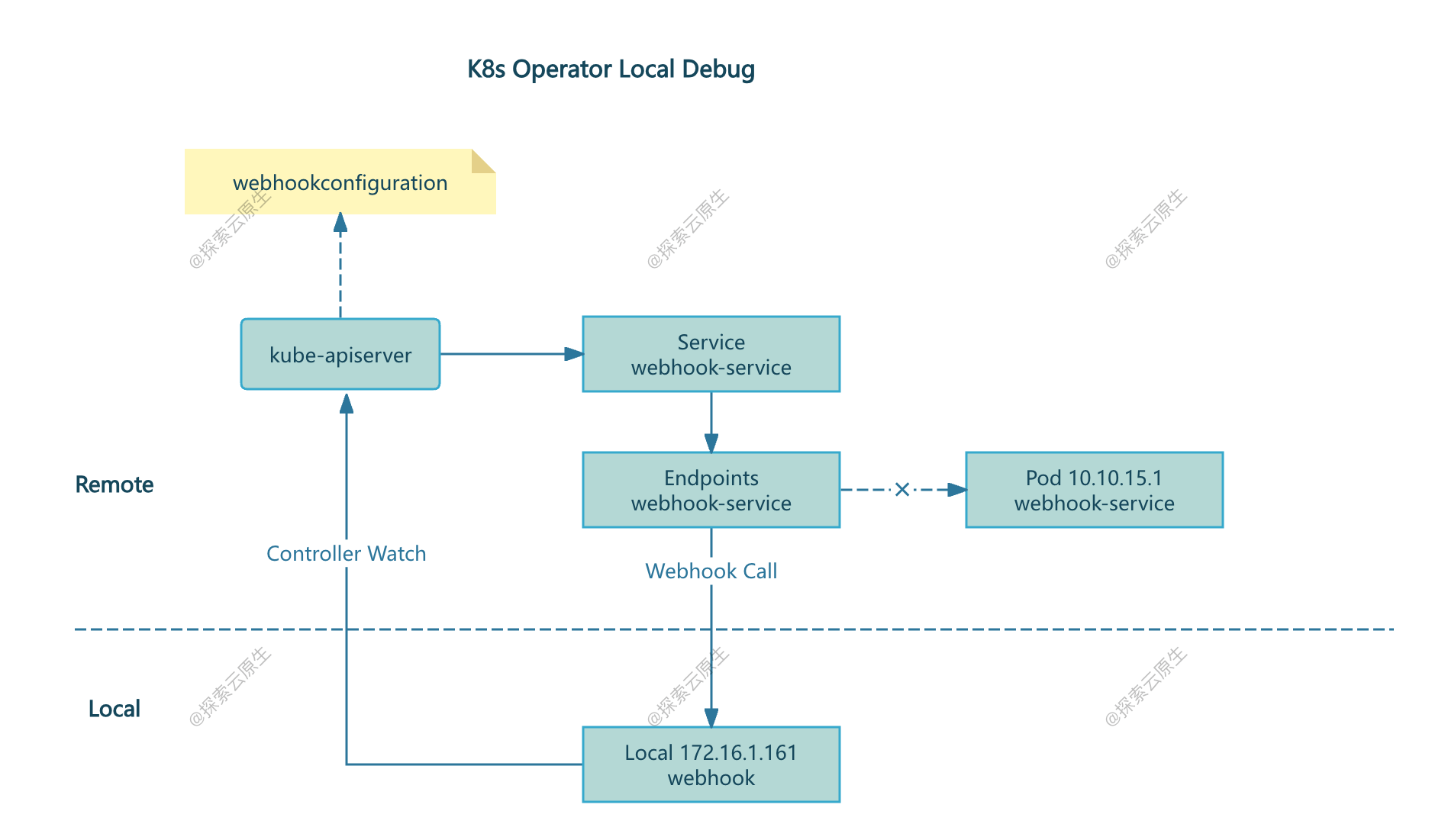

The Custom Endpoints Approach

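By default kube-apiserver calls the Webhook through a Service. So we create the Service as usual, but create its Endpoints object by hand and fill in the local IP. kube-apiserver still goes through the Service, and the traffic ends up on the local machine.

This is how custom Endpoints make local Webhook debugging possible.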

For example:

Edit config/webhook/service.yaml:

```bash
vi config/webhook/service.yaml
```

with the following content:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: webhook-service
  namespace: test
spec:
  ports:
  - port: 443
    protocol: TCP
    targetPort: 9443
  # selector:
  #   control-plane: controller-manager
---
apiVersion: v1
kind: Endpoints
metadata:
  name: webhook-service
  namespace: test
subsets:
- addresses:
  - ip: 172.16.1.161
  ports:
  - port: 9443
    protocol: TCP
```

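Two things to note:

- The Service's selector is commented out. Kubernetes manages the Endpoints of a Service that has a selector, and would overwrite the hand-written object.
- The IP in Endpoints is the local machine's IP, so when kube-apiserver calls the Webhook through this Service, the request is forwarded to the Webhook server running locally. The request path is: kube-apiserver → Service webhook-service:443 → Endpoints 172.16.1.161:9443 → local Webhook server.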

SSH Remote Port Forwarding

If your machine has no IP that the remote servers can reach directly, you can use SSH remote port forwarding instead.

For example:

```bash
ssh -N -R 192.168.95.145:9443:localhost:9443 root@192.168.95.145
```

This command forwards port 9443 on the remote server 192.168.95.145 to localhost:9443 on the local machine.

Then just set the IP in Endpoints to 192.168.95.145:

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: webhook-service
  namespace: test
subsets:
- addresses:
  - ip: 192.168.95.145
  ports:
  - port: 9443
    protocol: TCP
```

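In the end, kube-apiserver calls the Service IP, kube-proxy forwards the request to the Endpoints we configured (192.168.95.145), and the SSH tunnel forwards it on to the local machine, which is what makes remote debugging work.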

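In most cases you also need to configure GatewayPorts, which controls which addresses a reverse tunnel may bind to:

- `GatewayPorts no` (default): the reverse tunnel binds only to the remote host's loopback address (127.0.0.1) and cannot be reached through the remote host's external IP or other interfaces.
- `GatewayPorts yes`: the reverse tunnel can bind to all available interfaces on the remote host (including the external IP), so it can listen on a specific IP of the remote host (such as 192.168.95.145) or on 0.0.0.0.

Edit the SSH server configuration on the remote host:

```bash
vi /etc/ssh/sshd_config
```

Make sure the following option is set to yes: `GatewayPorts yes`

Restart the SSH service to apply the change, for example with `systemctl restart sshd` on systemd-based systems.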

Next, let's look at what needs to change in a Kubebuilder project.

Configure the Webhook Service

The first step, naturally, is to modify the Service configuration for the Webhook.

```bash
vi config/webhook/service.yaml
```

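The same two points apply as above: comment out the selector, and point the Endpoints at the local IP.

The complete service.yaml after the change: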

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: i-operator
    app.kubernetes.io/managed-by: kustomize
  name: webhook-service
  namespace: system
spec:
  ports:
  - port: 443
    protocol: TCP
    targetPort: 9443
  # selector:
  #   control-plane: controller-manager
---
apiVersion: v1
kind: Endpoints
metadata:
  name: webhook-service
  namespace: system
subsets:
- addresses:
  - ip: 172.16.1.161
  ports:
  - port: 9443
    protocol: TCP
```

Issue Certificates with cert-manager

kube-apiserver calls the Webhook over HTTPS, so we need to issue a certificate and enable TLS on the local Webhook server.

Following Kubebuilder's recommendation, cert-manager is used to generate and manage the Webhook certificates.

Install cert-manager in the cluster first. Following the cert-manager installation docs, just run:

```bash
kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.16.2/cert-manager.yaml
```

Kubebuilder has also generated the cert-manager configuration, under the config/certmanager directory:

```yaml
# The following manifests contain a self-signed issuer CR and a certificate CR.
# More document can be found at https://docs.cert-manager.io
# WARNING: Targets CertManager v1.0. Check https://cert-manager.io/docs/installation/upgrading/ for breaking changes.
apiVersion: cert-manager.io/v1
kind: Issuer
metadata:
  labels:
    app.kubernetes.io/name: i-operator
    app.kubernetes.io/managed-by: kustomize
  name: selfsigned-issuer
  namespace: system
spec:
  selfSigned: {}
---
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  labels:
    app.kubernetes.io/name: certificate
    app.kubernetes.io/instance: serving-cert
    app.kubernetes.io/component: certificate
    app.kubernetes.io/created-by: i-operator
    app.kubernetes.io/part-of: i-operator
    app.kubernetes.io/managed-by: kustomize
  name: serving-cert # this name should match the one appeared in kustomizeconfig.yaml
  namespace: system
spec:
  # SERVICE_NAME and SERVICE_NAMESPACE will be substituted by kustomize
  dnsNames:
  - SERVICE_NAME.SERVICE_NAMESPACE.svc
  - SERVICE_NAME.SERVICE_NAMESPACE.svc.cluster.local
  issuerRef:
    kind: Issuer
    name: selfsigned-issuer
  secretName: webhook-server-cert # this secret will not be prefixed, since it's not managed by kustomize
```

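This configuration is not enabled by default, however. To enable the cert-manager part, edit kustomize's default configuration file:

```bash
vi config/default/kustomization.yaml
```

Make the following changes:

1) Adjust namespace and namePrefix to your environment.

2) In the resources section, certmanager is commented out by default; uncomment it. resources then contains crd, rbac, manager, webhook, certmanager, and metrics_service.yaml.

3) The cert-manager replacements (the sections marked [CERTMANAGER]) are also commented out by default; uncomment them.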

The adjusted config/default/kustomization.yaml:

```yaml
# Adds namespace to all resources.
namespace: test
namePrefix: i-operator-
resources:
- ../crd
- ../rbac
- ../manager
- ../webhook
- ../certmanager
#- ../prometheus
- metrics_service.yaml
#- ../network-policy
patches:
- path: manager_metrics_patch.yaml
  target:
    kind: Deployment
- path: manager_webhook_patch.yaml
# [CERTMANAGER] To enable cert-manager, uncomment all sections with 'CERTMANAGER' prefix.
replacements:
- source: # Uncomment the following block if you have any webhook
    kind: Service
    version: v1
    name: webhook-service
    fieldPath: .metadata.name # Name of the service
  targets:
  - select:
      kind: Certificate
      group: cert-manager.io
      version: v1
    fieldPaths:
    ...
# omitted
```

With that, everything we need to change is in place.

Deploy All Resources to the Cluster

Run `make deploy`, which uses Kustomize to generate the YAML and applies it to the cluster:

```bash
❯ make deploy
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
cd config/manager && /Users/lixueduan/17x/projects/i-operator/bin/kustomize edit set image controller=controller:latest
/Users/lixueduan/17x/projects/i-operator/bin/kustomize build config/default | kubectl apply -f -
namespace/test unchanged
customresourcedefinition.apiextensions.k8s.io/applications.core.crd.lixueduan.com created
serviceaccount/i-operator-controller-manager unchanged
role.rbac.authorization.k8s.io/i-operator-leader-election-role unchanged
clusterrole.rbac.authorization.k8s.io/i-operator-application-editor-role unchanged
clusterrole.rbac.authorization.k8s.io/i-operator-application-viewer-role unchanged
clusterrole.rbac.authorization.k8s.io/i-operator-manager-role unchanged
clusterrole.rbac.authorization.k8s.io/i-operator-metrics-auth-role unchanged
clusterrole.rbac.authorization.k8s.io/i-operator-metrics-reader unchanged
rolebinding.rbac.authorization.k8s.io/i-operator-leader-election-rolebinding unchanged
clusterrolebinding.rbac.authorization.k8s.io/i-operator-manager-rolebinding unchanged
clusterrolebinding.rbac.authorization.k8s.io/i-operator-metrics-auth-rolebinding unchanged
endpoints/i-operator-webhook-service unchanged
service/i-operator-controller-manager-metrics-service unchanged
service/i-operator-webhook-service unchanged
deployment.apps/i-operator-controller-manager unchanged
certificate.cert-manager.io/i-operator-serving-cert unchanged
issuer.cert-manager.io/i-operator-selfsigned-issuer unchanged
mutatingwebhookconfiguration.admissionregistration.k8s.io/i-operator-mutating-webhook-configuration configured
validatingwebhookconfiguration.admissionregistration.k8s.io/i-operator-validating-webhook-configuration configured
```

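This deploys the resources listed under resources in the Kustomize configuration above:

- CRD: the Application object defined in this project
- RBAC: grants sufficient permissions to the Deployment below
- Manager: runs the Controller as a Deployment
- CertManager: the Certificate and Issuer objects used to issue the certificate
- Webhook: the webhook configurations plus the modified Service (and its Endpoints)
- Metrics Service: exposes monitoring metrics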

After deployment, cert-manager in the cluster automatically issues a certificate based on the Certificate and Issuer objects and writes it into a Secret:

```bash
[root@operator ~]# kubectl -n test get certificate
NAME                      READY   SECRET                AGE
i-operator-serving-cert   True    webhook-server-cert   41m
[root@operator ~]# kubectl -n test get issuer
NAME                           READY   AGE
i-operator-selfsigned-issuer   True    41m
[root@operator ~]# kubectl -n test get secret
NAME                  TYPE                DATA   AGE
webhook-server-cert   kubernetes.io/tls   3      41m
```

In addition, because Kustomize adds the cert-manager.io/inject-ca-from annotation, cert-manager automatically injects the CA into the webhook configurations. Let's check:

```yaml
[root@operator ~]# kubectl get MutatingWebhookConfiguration i-operator-mutating-webhook-configuration -oyaml
apiVersion: admissionregistration.k8s.io/v1
kind: MutatingWebhookConfiguration
metadata:
  annotations:
    cert-manager.io/inject-ca-from: test/i-operator-serving-cert
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"admissionregistration.k8s.io/v1","kind":"MutatingWebhookConfiguration","metadata":{"annotations":{"cert-manager.io/inject-ca-from":"test/i-operator-serving-cert"},"name":"i-operator-mutating-webhook-configuration"},"webhooks":[{"admissionReviewVersions":["v1"],"clientConfig":{"service":{"name":"i-operator-webhook-service","namespace":"test","path":"/mutate-core-crd-lixueduan-com-v1-application"}},"failurePolicy":"Fail","name":"mapplication-v1.lixueduan.com","rules":[{"apiGroups":["core.crd.lixueduan.com"],"apiVersions":["v1"],"operations":["CREATE","UPDATE"],"resources":["applications"]}],"sideEffects":"None"}]}
  creationTimestamp: "2024-12-31T04:36:16Z"
  generation: 2
  name: i-operator-mutating-webhook-configuration
  resourceVersion: "2135996"
  uid: eee0b7f6-b789-46b2-a343-f1a3c632067a
webhooks:
- admissionReviewVersions:
  - v1
  clientConfig:
    caBundle: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURFakNDQWZxZ0F3SUJBZ0lRUFM0d2tzU0M4dlRMVkJvd2o3YlV1REFOQmdrcWhraUc5dzBCQVFzRkFEQUEKTUI0WERUSTBNVEl6TVRBME16WXhObG9YRFRJMU1ETXpNVEEwTXpZeE5sb3dBRENDQVNJd0RRWUpLb1pJaHZjTgpBUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTHU3UXpKTjF6OUdTSFduRU9RQUZuK3NWeFNiSTVGY0lLMkhSY0ZpCnFYK0hDdFREL3VPZ3ByR2FpcG9GVDNPdGZjNnVGS0loK3FZTDlyK3pPcDdyTmtJV24zTkQ4djBuSStaSld0NGcKZk5IT3RSU2tVNFpESE5nam1hUUlaemhGWStkRHE5dklDVjZNSml6SVFGNHJ4ejBRREJiSTU5Uy9BcTR2QUFFZQpITEI1aEdnVU95ZnoydnpWaytHU3loSlVPaGZTTS8xaDltQzR0VUFOdWpoZlVGQVpaR29PbnN4VmVCclFwVDBHClFvT2tyOU51T3FjYzByL0VvQkpnMGl0L0xxQVJYanVKcGtxdnd1K2ZmamVLRnphTDMxd3JwcmNpSWRFbE1KdEoKRkUvNDFzT2xrU29aZ0FsMitFTERvdUhNU3JlUEdOMWxmZmpwQVk4TnJlQWZxK3NDQXdFQUFhT0JoekNCaERBTwpCZ05WSFE4QkFmOEVCQU1DQmFBd0RBWURWUjBUQVFIL0JBSXdBREJrQmdOVkhSRUJBZjhFV2pCWWdpTnBMVzl3ClpYSmhkRzl5TFhkbFltaHZiMnN0YzJWeWRtbGpaUzUwWlhOMExuTjJZNEl4YVMxdmNHVnlZWFJ2Y2kxM1pXSm8KYjI5ckxYTmxjblpwWTJVdWRHVnpkQzV6ZG1NdVkyeDFjM1JsY2k1c2IyTmhiREFOQmdrcWhraUc5dzBCQVFzRgpBQU9DQVFFQXEyR21YcGU2WnN0WnVUbjQvaGZxaXRONVU0bmdzM3BLamJ0WTBmY1lKVkI2SFZTYXJnS0NaSEwxCmJGU2YxWk96NS94WTZrZW0zWlRnbHdZMHRXZTI2dStFVTF4ckxCTXJEWGpnK0VIVlVyZTdWVnIwYTB3RTZvOVQKVFh2NVpqUDY0clVlT3o5TE1GZHU0Q3IzVHZPWEVVbGc3MU00MVR1U1JUQ1B3Wkl3NzRqU3A0QjA5Nm9iMFFEVQpkY090TVk4WTVwRlZnZEZLSk00QUlSdk94Ylp4WThlUFhENjhDTk5SVUF6bGNJdWk0VForWmhaMlpieVg0S3YzClZ3SnhWcmZqZTFMUlBCdHNVQ0l6VEE1Z09rZUhZRncrVmJQeWI1R1FZeVh6ZjMxOENodERJTVRML1RBZzc5cUkKNkJZdStHcFVFci9wTDR0S3lic2NyT3hHK0NmZjdBPT0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    service:
      name: i-operator-webhook-service
      namespace: test
      path: /mutate-core-crd-lixueduan-com-v1-application
      port: 443
```

As you can see, the caBundle field has been injected automatically, so everything is working.
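To check the injected value yourself, a sketch like the following decodes a caBundle, confirms it holds a currently valid certificate, and verifies that the certificate covers the Service DNS name kube-apiserver dials (here `i-operator-webhook-service.test.svc`, from `SERVICE_NAME.SERVICE_NAMESPACE.svc` in the Certificate). It assumes, as with the self-signed Issuer used here, that the injected CA is the serving certificate itself; with a CA-based Issuer you would verify the chain instead. The value can be fetched with `kubectl get mutatingwebhookconfiguration i-operator-mutating-webhook-configuration -o jsonpath='{.webhooks[0].clientConfig.caBundle}'`. The helper `selfSignedBundle` is hypothetical and only exists to exercise the check without a cluster.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"encoding/base64"
	"encoding/pem"
	"errors"
	"fmt"
	"math/big"
	"time"
)

// checkCABundle decodes a caBundle (base64 of PEM) and checks validity period and host name.
func checkCABundle(caBundle, host string) error {
	raw, err := base64.StdEncoding.DecodeString(caBundle)
	if err != nil {
		return fmt.Errorf("caBundle is not base64: %w", err)
	}
	block, _ := pem.Decode(raw)
	if block == nil || block.Type != "CERTIFICATE" {
		return errors.New("caBundle does not contain a PEM certificate")
	}
	cert, err := x509.ParseCertificate(block.Bytes)
	if err != nil {
		return err
	}
	now := time.Now()
	if now.Before(cert.NotBefore) || now.After(cert.NotAfter) {
		return fmt.Errorf("certificate not valid now (valid %s to %s)", cert.NotBefore, cert.NotAfter)
	}
	return cert.VerifyHostname(host)
}

// selfSignedBundle builds a throwaway self-signed certificate for one DNS name (demo only).
func selfSignedBundle(dnsName string) (string, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return "", err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(1),
		Subject:      pkix.Name{CommonName: dnsName},
		DNSNames:     []string{dnsName},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(time.Hour),
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, tmpl, &key.PublicKey, key)
	if err != nil {
		return "", err
	}
	pemBytes := pem.EncodeToMemory(&pem.Block{Type: "CERTIFICATE", Bytes: der})
	return base64.StdEncoding.EncodeToString(pemBytes), nil
}

func main() {
	bundle, err := selfSignedBundle("i-operator-webhook-service.test.svc")
	if err != nil {
		panic(err)
	}
	fmt.Println(checkCABundle(bundle, "i-operator-webhook-service.test.svc"))
}
```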

Copy the Certificates to the Local Machine

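Finally, copy the generated tls.key and tls.crt files to your local machine so the locally started Webhook server can use them.

First, fetch the certificate cert-manager issued from the cluster and write it to the corresponding directory: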

```bash
# Extract the certificate and key from the Secret
mkdir -p /tmp/k8s-webhook-server/serving-certs
kubectl get secret -n test webhook-server-cert -o=jsonpath='{.data.tls\.crt}' | base64 -d > /tmp/k8s-webhook-server/serving-certs/tls.crt
kubectl get secret -n test webhook-server-cert -o=jsonpath='{.data.tls\.key}' | base64 -d > /tmp/k8s-webhook-server/serving-certs/tls.key
```

On startup, the server reads tls.crt and tls.key from <temp-dir>/k8s-webhook-server/serving-certs by default. This location can be changed, but I don't recommend it.

Note, however, that <temp-dir> depends on the TMPDIR environment variable. On my Mac it defaults to:

```bash
$ echo $TMPDIR
/var/folders/r5/vby20fm56t3g3bhydvcn897h0000gn/T/
```

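All that matters is that the certificate files are in the directory the server actually reads, which is why the command below sets TMPDIR=/tmp.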

Start the Controller Locally

Next, start the Controller locally:

```bash
❯ TMPDIR=/tmp make run
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen object:headerFile="hack/boilerplate.go.txt" paths="./..."
go fmt ./...
go vet ./...
go run ./cmd/main.go
2024-12-31T13:06:56+08:00 INFO controller-runtime.builder Registering a mutating webhook {"GVK": "core.crd.lixueduan.com/v1, Kind=Application", "path": "/mutate-core-crd-lixueduan-com-v1-application"}
2024-12-31T13:06:56+08:00 INFO controller-runtime.webhook Registering webhook {"path": "/mutate-core-crd-lixueduan-com-v1-application"}
2024-12-31T13:06:56+08:00 INFO controller-runtime.builder Registering a validating webhook {"GVK": "core.crd.lixueduan.com/v1, Kind=Application", "path": "/validate-core-crd-lixueduan-com-v1-application"}
2024-12-31T13:06:56+08:00 INFO controller-runtime.webhook Registering webhook {"path": "/validate-core-crd-lixueduan-com-v1-application"}
2024-12-31T13:06:56+08:00 INFO setup starting manager
2024-12-31T13:06:56+08:00 INFO starting server {"name": "health probe", "addr": "[::]:8081"}
2024-12-31T13:06:56+08:00 INFO controller-runtime.webhook Starting webhook server
2024-12-31T13:06:56+08:00 INFO setup disabling http/2
2024-12-31T13:06:56+08:00 INFO Starting EventSource {"controller": "application", "controllerGroup": "core.crd.lixueduan.com", "controllerKind": "Application", "source": "kind source: *v1.Application"}
2024-12-31T13:06:56+08:00 INFO Starting EventSource {"controller": "application", "controllerGroup": "core.crd.lixueduan.com", "controllerKind": "Application", "source": "kind source: *v1.Deployment"}
2024-12-31T13:06:56+08:00 INFO Starting Controller {"controller": "application", "controllerGroup": "core.crd.lixueduan.com", "controllerKind": "Application"}
2024-12-31T13:06:56+08:00 INFO controller-runtime.certwatcher Updated current TLS certificate
2024-12-31T13:06:56+08:00 INFO controller-runtime.webhook Serving webhook server {"host": "", "port": 9443}
2024-12-31T13:06:56+08:00 INFO controller-runtime.certwatcher Starting certificate watcher
2024-12-31T13:06:56+08:00 INFO Starting workers {"controller": "application", "controllerGroup": "core.crd.lixueduan.com", "controllerKind": "Application", "worker count": 1}
```

You can of course also start it in debug mode and set breakpoints.

4. Testing

Webhook Test

Invalid Application

Create an invalid Application object whose image field is empty:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: core.crd.lixueduan.com/v1
kind: Application
metadata:
  name: validate
  namespace: default
spec:
  enabled: true
  image: ''
EOF
```

Our validating Webhook should reject it with an error like this:

```bash
❯ cat <<EOF | kubectl apply -f -
apiVersion: core.crd.lixueduan.com/v1
kind: Application
metadata:
  name: validate
  namespace: default
spec:
  enabled: true
  image: ''
EOF
Error from server (Forbidden): error when creating "STDIN": admission webhook "vapplication-v1.lixueduan.com" denied the request: invalid image name:
```

The request was rejected and the Application was not created, as expected, which shows that this piece of Webhook logic works. The relevant code:

```go
// ValidateCreate implements webhook.CustomValidator so a webhook will be registered for the type Application.
func (v *ApplicationCustomValidator) ValidateCreate(ctx context.Context, obj runtime.Object) (admission.Warnings, error) {
	application, ok := obj.(*corev1.Application)
	if !ok {
		return nil, fmt.Errorf("expected a Application object but got %T", obj)
	}
	applicationlog.Info("Validation for Application upon creation", "name", application.GetName())

	if !isValidImageName(application.Spec.Image) {
		return nil, fmt.Errorf("invalid image name: %s", application.Spec.Image)
	}

	return nil, nil
}
```
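The article does not show `isValidImageName`. A hypothetical version, assuming a simplified image-reference grammar: lowercase path components separated by `/`, each made of `[a-z0-9]` runs joined by `.`, `_` or `-`, plus an optional `:tag`. It rejects the empty image from the test above but deliberately does not handle registry ports (`localhost:5000/app`) or digests; the `reference` package from the distribution project implements the full grammar if you need it.

```go
package main

import (
	"fmt"
	"regexp"
)

// imageRef is a deliberately simplified image-reference pattern (an assumption,
// not the author's helper): name components plus an optional tag of up to 128 chars.
var imageRef = regexp.MustCompile(
	`^[a-z0-9]+(?:[._-][a-z0-9]+)*(?:/[a-z0-9]+(?:[._-][a-z0-9]+)*)*(?::[A-Za-z0-9_][A-Za-z0-9_.-]{0,127})?$`)

// isValidImageName is a hypothetical stand-in for the helper called in ValidateCreate.
func isValidImageName(image string) bool {
	return imageRef.MatchString(image)
}

func main() {
	for _, img := range []string{"", "nginx:1.22", "lixd96/controller:latest", "NGINX"} {
		fmt.Printf("%q -> %v\n", img, isValidImageName(img))
	}
}
```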

Valid Application

Create a simple Application object:

```bash
cat <<EOF | kubectl apply -f -
apiVersion: core.crd.lixueduan.com/v1
kind: Application
metadata:
  name: demo
  namespace: default
spec:
  enabled: true
  image: 'nginx:1.22'
EOF
application.core.crd.lixueduan.com/demo created
```

This time it is created successfully.

Controller Test

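While testing the Webhook we created an Application named demo.

According to the Controller logic, once an Application is created, the Controller creates a corresponding Deployment whose image is the value of Spec.Image.

Once the Deployment is Ready, it also sets the Application's Status.Ready to true.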
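The decision part of that logic can be modelled without a cluster. A hypothetical sketch: `deploymentState` stands in for the fields of an appsv1.Deployment the Controller cares about (replica counts are plain `int32` here, not pointers), the `app-<name>` naming comes from the logs below, and spec.enabled is ignored because its semantics are not described here. A real reconciler would fetch and update the objects through a controller-runtime client.

```go
package main

import "fmt"

// deploymentState is a simplified, hypothetical view of the Deployment.
type deploymentState struct {
	Exists        bool
	Image         string
	Replicas      int32
	ReadyReplicas int32
}

type action string

const (
	actionCreate action = "create"
	actionUpdate action = "update"
	actionNone   action = "none"
)

// deploymentName follows the naming seen in the logs: Application "demo" -> "app-demo".
func deploymentName(appName string) string { return "app-" + appName }

// plan decides what to do with the Deployment and what Status.Ready should report.
func plan(specImage string, d deploymentState) (action, bool) {
	ready := d.Exists && d.Replicas > 0 && d.ReadyReplicas == d.Replicas
	switch {
	case !d.Exists:
		return actionCreate, ready
	case d.Image != specImage:
		return actionUpdate, ready
	default:
		return actionNone, ready
	}
}

func main() {
	a, ready := plan("nginx:1.23", deploymentState{Exists: true, Image: "nginx:1.22", Replicas: 1, ReadyReplicas: 1})
	fmt.Println(deploymentName("demo"), a, ready) // app-demo update true
}
```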

Check the Controller logs:

```
2024-12-19T14:07:37+08:00 INFO reconcile application {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:07:37+08:00 INFO new app,add finalizer {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:07:37+08:00 INFO reconcile application {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:07:37+08:00 INFO reconcile application create deployment {"app": "default", "deployment": "app-demo"}
2024-12-19T14:07:38+08:00 INFO sync app status {"app": {"name":"demo","namespace":"default"}}
```

The Controller has picked up the new Application and is running its reconcile logic, and the corresponding Deployment has been created:

```bash
[root@operator ~]# kubectl get applications
NAME   AGE
demo   109s
[root@operator ~]# kubectl get deploy
NAME       READY   UP-TO-DATE   AVAILABLE   AGE
app-demo   1/1     1            1           111s
[root@operator ~]# kubectl get po
NAME                        READY   STATUS    RESTARTS   AGE
app-demo-86b66c84cd-cztk8   1/1     Running   0          3m13s
```

The Deployment is now Ready; let's check whether the Application status has been updated:

```yaml
[root@operator ~]# kubectl get applications demo -oyaml
apiVersion: core.crd.lixueduan.com/v1
kind: Application
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"core.crd.lixueduan.com/v1","kind":"Application","metadata":{"annotations":{},"name":"demo","namespace":"default"},"spec":{"enabled":true,"image":"nginx:1.22"}}
  creationTimestamp: "2024-12-19T06:07:36Z"
  finalizers:
  - lixueduan.com/application
  generation: 1
  name: demo
  namespace: default
  resourceVersion: "298825"
  uid: de7e4830-0ae7-4349-add1-50349be28ade
spec:
  enabled: true
  image: nginx:1.22
status:
  ready: true
```

status.ready has been set to true as well.

Update the Application

Next, update the image field of the Application and check whether the Controller syncs the change.

```bash
cat <<EOF | kubectl apply -f -
apiVersion: core.crd.lixueduan.com/v1
kind: Application
metadata:
  name: demo
  namespace: default
spec:
  enabled: true
  image: 'nginx:1.23'
EOF
```

Controller logs:

```
2024-12-19T14:17:46+08:00 INFO reconcile application update deployment {"app": "default", "deployment": "app-demo"}
2024-12-19T14:17:46+08:00 INFO sync app status {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:17:46+08:00 INFO reconcile application {"app": {"name":"demo","namespace":"default"}}
```

The Controller detected the change and updated the Deployment. Check the Deployment to confirm:

```bash
[root@operator ~]# kubectl get deploy app-demo -oyaml | grep image
      - image: nginx:1.23
        imagePullPolicy: IfNotPresent
```

Delete the Application

Finally, test the deletion logic: after the Application object is deleted, the Controller should delete the associated Deployment.

```bash
kubectl delete applications demo
```

Controller logs:

```
2024-12-19T14:19:51+08:00 INFO reconcile application {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:19:51+08:00 INFO app deleted, clean up {"app": {"name":"demo","namespace":"default"}}
2024-12-19T14:19:51+08:00 INFO reconcile application delete deployment {"app": "default", "deployment": "app-demo"}
```

Everything looks fine. Confirm that the Deployment was really deleted:

```bash
[root@operator ~]# kubectl get deploy
No resources found in default namespace.
```

The Deployment has been deleted too, so the Controller works as intended.

At this point the Operator is essentially developed and debugged. What remains is to build the Controller image and deploy it to the cluster for real.

5. Deployment

So far the Controller has run locally; to deploy it to the cluster, first build it into an image.

Build the Image

This is simple too, since Kubebuilder prepared everything at init time: just run `make docker-buildx`.

It builds a multi-architecture image with Docker Buildx, so you need a working Buildx environment.

```bash
IMG=lixd96/controller:latest PLATFORMS=linux/arm64,linux/amd64 make docker-buildx
```

The source code is hosted on GitHub, with a workflow, buildah-build.yaml, that builds the image and pushes it to Docker Hub on every push:

```yaml
name: Build and Push Multi-Arch Image

on:
  push:

env:
  IMAGE_NAME: i-operator
  IMAGE_TAG: latest
  IMAGE_REGISTRY: docker.io
  IMAGE_NAMESPACE: lixd96

jobs:
  build:
    name: Build and Push Multi-Architecture Image
    runs-on: ubuntu-20.04

    steps:
      # Checkout the repository
      - name: Checkout repository
        uses: actions/checkout@v2

      # Set up QEMU for cross-platform builds
      - name: Set up QEMU for multi-arch support
        uses: docker/setup-qemu-action@v1

      # Build the Docker image using Buildah
      - name: Build multi-architecture image
        id: build-image
        uses: redhat-actions/buildah-build@v2
        with:
          image: ${{ env.IMAGE_NAME }}
          tags: ${{ env.IMAGE_TAG }}
          archs: amd64,ppc64le,s390x,arm64 # Specify the architectures for multi-arch support
          dockerfiles: |
            ./Dockerfile

      # Push the built image to the specified container registry
      - name: Push image to registry
        id: push-to-registry
        uses: redhat-actions/push-to-registry@v2
        with:
          image: ${{ steps.build-image.outputs.image }}
          tags: ${{ steps.build-image.outputs.tags }}
          registry: ${{ env.IMAGE_REGISTRY }}/${{ env.IMAGE_NAMESPACE }}
          username: ${{ secrets.REGISTRY_USERNAME }} # Secure registry username
          password: ${{ secrets.REGISTRY_PASSWORD }} # Secure registry password

      # Print the image URL after the image has been pushed
      - name: Print pushed image URL
        run: echo "Image pushed to ${{ steps.push-to-registry.outputs.registry-paths }}"
```

Generate the Deployment YAML

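When actually deploying the Controller to the cluster, it usually runs as a Deployment.

Running `make build-installer` generates the YAML for the CRD and for the Deployment that runs the Controller.

Before that, however, re-enable the spec.selector field that was commented out for Webhook debugging, and comment out the Endpoints object: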

```bash
vi config/webhook/service.yaml
```

After the change, the file reads:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: i-operator
    app.kubernetes.io/managed-by: kustomize
  name: webhook-service
  namespace: system
spec:
  ports:
  - port: 443
    protocol: TCP
    targetPort: 9443
  selector:
    control-plane: controller-manager
---
#apiVersion: v1
#kind: Endpoints
#metadata:
#  name: webhook-service
#  namespace: system
#subsets:
#  - addresses:
#      - ip: 172.16.1.161
#    ports:
#      - port: 9443
#        protocol: TCP
```

Then run the command to generate install.yaml:

```bash
❯ IMG=lixd96/controller:latest make build-installer
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen rbac:roleName=manager-role crd webhook paths="./..." output:crd:artifacts:config=config/crd/bases
/Users/lixueduan/17x/projects/i-operator/bin/controller-gen object:headerFile="hack/boilerplate.go.txt" paths="./..."
mkdir -p dist
cd config/manager && /Users/lixueduan/17x/projects/i-operator/bin/kustomize edit set image controller=lixd96/controller:latest
/Users/lixueduan/17x/projects/i-operator/bin/kustomize build config/default > dist/install.yaml
```

The resulting dist/install.yaml contains all the resources needed to deploy the Operator; to deploy, simply apply that file.

That completes the full workflow of developing, debugging, and deploying the Operator.

6. Summary

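Local debugging in K8s Operator development:

- Prepare a cluster and make sure you can run kubectl commands against it from your machine.
- Debugging the Controller is simple: `make manifests`, `make install`, then `make run` (or start it under a debugger).
- Adding a Webhook brings certificate-related configuration: install cert-manager, enable the certmanager sections in config/default/kustomization.yaml, run `make deploy`, and copy tls.crt and tls.key to the local certificate directory.
- The tricky part is that the Webhook is called by kube-apiserver inside the cluster, which reaches it through the Service DNS name as configured. Without breaking that Service-based access, a hand-written Endpoints object pointing at the local IP forwards the requests to the local Webhook.

Production deployment of a K8s Operator:

- Build the image: `make docker-buildx`
- Generate the deployment manifest: `make build-installer`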
